Content Validation Through a Crowdsourcing Model

Testing is essential to the success of web applications, mobile applications, and indeed any usable software, including online digital content. With advances in automation, especially technologies such as RPA (robotic process automation), AI, and ML, testing depends less and less on people. But can the manual aspect of testing be eliminated completely? Surely not!

Crowd testing is a proven methodology for the usability and functional testing of applications. A key advantage is the ability to replicate real-world user scenarios across a wide range of network bandwidths and devices. Another is that crowd testers, like first-time users, come to the application without any prior knowledge of it. And with so much of the world shifting online, the range of work that can draw on the power of the crowd has only grown.

Crowd testing now extends even to online interactive content. Even if RPA were used to automate parts of this testing, human intervention would still be needed to identify and remove false positives. Automation can check that an audio clip is not blank, but it takes a manual tester to confirm that the correct audio has been used, or that a glossary definition is accurate and apt.
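To make this split concrete, here is a minimal Python sketch of the kind of check automation handles well: flagging an audio clip that is effectively blank. It assumes 16-bit PCM WAV files, and the function names (`is_silent`, `make_wav`) and the RMS threshold of 0.001 of full scale are illustrative assumptions, not part of any tool used in this project.

```python
import array
import io
import math
import sys
import wave


def is_silent(wav_bytes: bytes, threshold: float = 0.001) -> bool:
    """Return True if a 16-bit PCM WAV clip is effectively blank.

    `threshold` is an assumed RMS level, as a fraction of full scale,
    below which the clip is treated as silent.
    """
    with wave.open(io.BytesIO(wav_bytes), "rb") as wav:
        if wav.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        frames = wav.readframes(wav.getnframes())

    samples = array.array("h", frames)  # WAV stores little-endian samples
    if sys.byteorder == "big":
        samples.byteswap()
    if len(samples) == 0:
        return True  # no audio data at all counts as blank

    # Root-mean-square amplitude, normalized to the 16-bit full-scale value.
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return rms / 32768 < threshold


def make_wav(samples: list[int], rate: int = 8000) -> bytes:
    """Build an in-memory mono 16-bit WAV file, for trying out is_silent."""
    data = array.array("h", samples)
    if sys.byteorder == "big":
        data.byteswap()
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(data.tobytes())
    return buf.getvalue()


if __name__ == "__main__":
    # One second of a 440 Hz tone versus one second of digital silence.
    tone = [int(10000 * math.sin(2 * math.pi * 440 * n / 8000)) for n in range(8000)]
    print(is_silent(make_wav([0] * 8000)))  # True
    print(is_silent(make_wav(tone)))        # False
```

A clip that passes this check is merely not blank; whether it is the right clip for that point in the content is exactly the judgment that still falls to a human tester.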

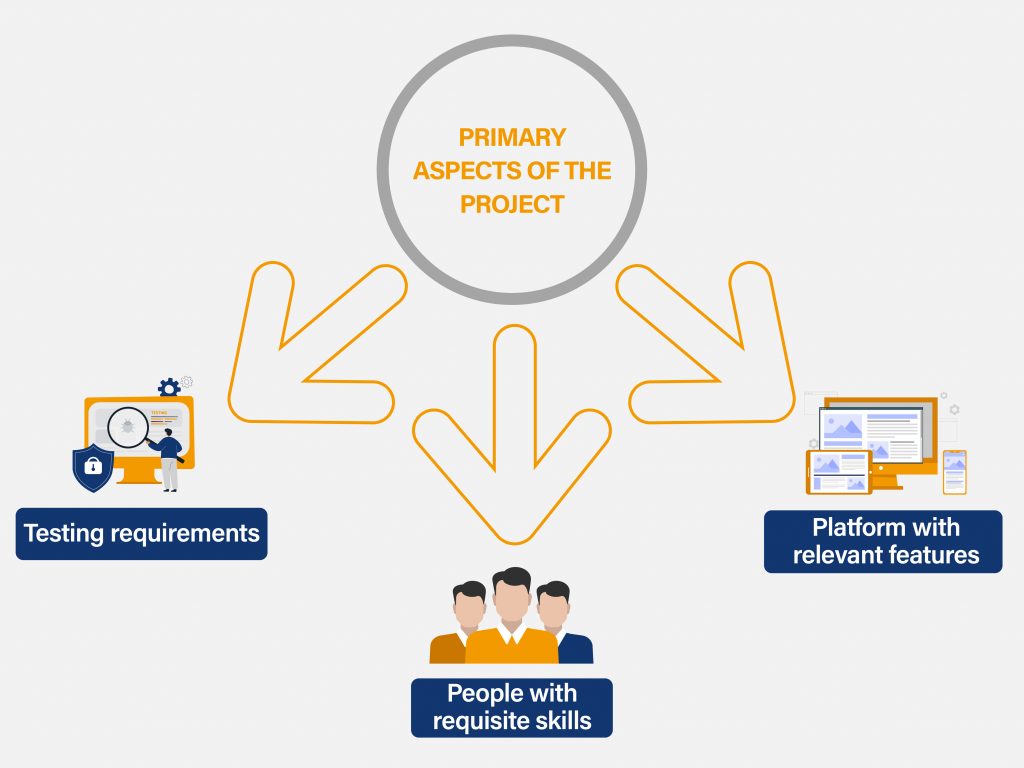

Let’s look at the three primary aspects of a project that needs editorial and functional validation of online digital content. First, we need to know what such testing entails. Second, we need people with the requisite skills. Third, we need a platform that serves as the central point for finding those people and coordinating all efforts.

Below is a case study on how we used the Oprimes platform to successfully deliver a complex content validation project.

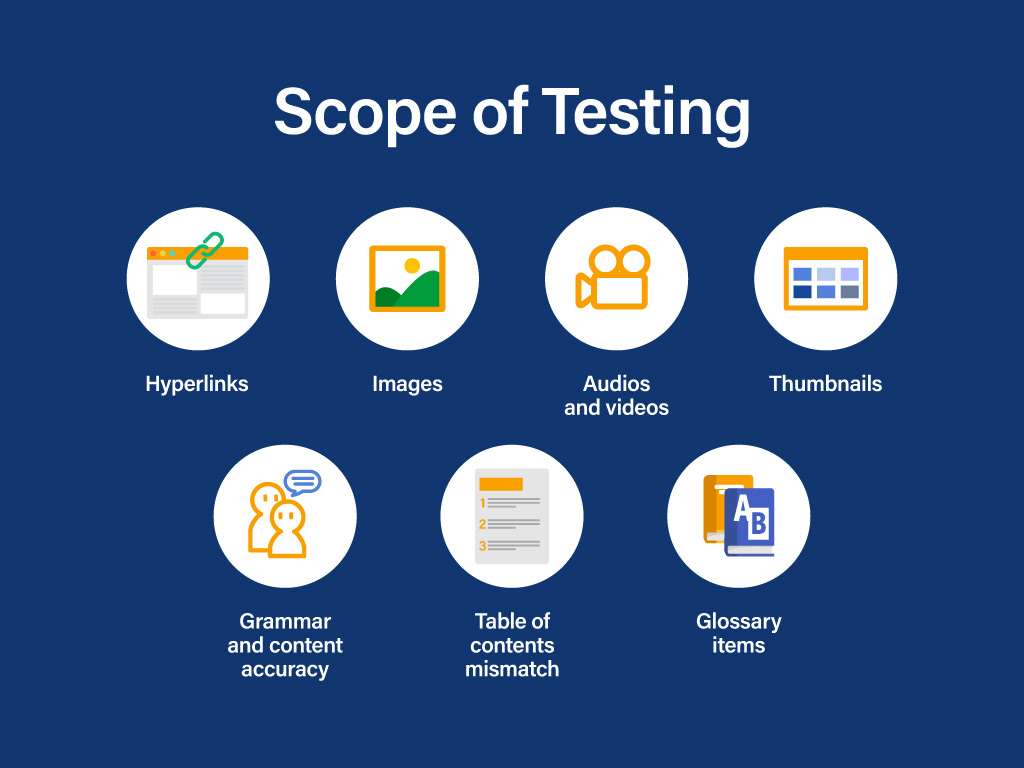

What can be tested?

The way we read content on paper is vastly different from how we engage with it online. From controlling how content is displayed (scrolling, zooming in and out, etc.) to supporting multimedia and rich elements (videos, tables, audio files, etc.), online content is in a different league altogether. The testing scope can include:

- Hyperlinks: whether they work correctly, and whether any embedded functionality, such as hide/unhide, works as intended
- Images: contraction and expansion, accurate descriptions, numbering, and fuzziness or viewability
- Audio and video: play, pause, volume, forward, backward, full-screen, and any other buttons
- Thumbnails: whether thumbnails have been added accurately
- Grammar and content accuracy: spelling errors, spacing issues, truncated lines, accurate punctuation, consistent use of titles, and breaking up sections so that the content has a more predictable structure
- Table of contents mismatch: the table of contents, or a section of it, may not match what is expected, which can hurt the reading experience
- Glossary items: a great advantage of the online medium is instant access to definitions of difficult words, but these boxes should pop up, should not be blank, and should be relevant and accurate
- Other buttons: any other controls, such as drop-downs and radio buttons, should work as expected

All of this, along with further checks customized to each project’s requirements, can be tested for online content.

Who can test?

A key benefit of content validation tasks is that an individual does not need to be a trained tester. Anyone with good written and spoken communication skills in the relevant language, an eye for detail, patience, and the ability to follow specific bug logging instructions can be a tester here. The question is how to tap into the skills already available in the market: people who have the required knowledge and ability and are looking for ways to supplement their income.

How was the Oprimes crowd-testing platform leveraged to deliver this project?

Oprimes is the country’s leading crowdtesting platform, delivering premium-quality testing throughput at an optimized cost. Its test cases are pre-designed by experts based on an analysis of hundreds of past projects, and they cover key app scenarios across all areas, including performance and usability.

The platform provides a central place to announce such a project. On one side, it lets the project team manage all information about the project centrally: the expectations, the scope of work, the methods for logging bugs, and the payout. On the other side, it records all relevant information about each tester, including their background, the devices they own, and their contact and account details. Once a set of testers had been identified for this project, the work was divided among them, and they were asked to log bugs in the prescribed format. A thorough review of the logged bugs helped keep bug misses to a minimum and ensured good-quality logging.

The delivery and impact

The project involved course material for schoolchildren that had to be validated and delivered within a fixed deadline, before the academic year started. Using this method, the crowd testers found more than 5,000 bugs of varying severity, with a bug-miss rate below 5%. The delivery helped the client ensure that the course content was accurate, optimized, and usable for children expected to rely on this online medium as their primary source of learning during the Covid-19 pandemic.

Let’s get started. Share your requirements and our team will get back to you with the perfect solution.