ABOUT SIRA

The State Insurance Regulatory Authority (SIRA) regulates three statutory insurance schemes in NSW:

- Compulsory Third Party (CTP) – support for people injured on NSW roads.

- Workers Compensation (WC) – support for workers with a work-related injury.

- Home Building Compensation (HBC) – protects homeowners as a last resort if their builder cannot complete building work or fix defects because the builder has become insolvent, died, disappeared or had their licence suspended for failing to comply with a court or tribunal order to compensate a homeowner.

In establishing SIRA, the Government’s intention was to create a consistent and robust framework to monitor and enforce insurance and compensation legislation in NSW, and to ensure that public outcomes are achieved in relation to injured people, policy affordability and scheme sustainability.

Newly formed Personal Injury Commission (PIC)

Dispute Resolution Services (DRS) was a department of SIRA that handled disputes between claimants and insurers for the CTP scheme, with case management functionality developed in the SIRA Salesforce org. As of 1 March 2021, a separate agency, the Personal Injury Commission (PIC), has been created to handle disputes for both the CTP and WC schemes. The DRS functions have moved to this new agency but still use the functionality in the SIRA Salesforce org.

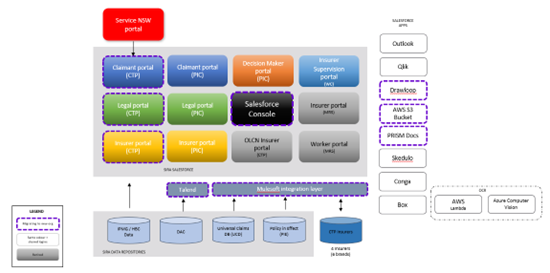

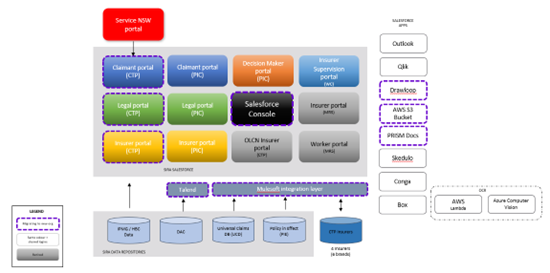

ARCHITECTURE OVERVIEW

TECHNOLOGY/TOOLS USED

The technologies/tools used to implement the proposed solutions are:

- Jira (used to track execution results and defects)

- Zephyr (used to track test case execution)

- Confluence (used for documentation)

- Tosca (used for automation testing)

- Microsoft Teams/Slack/Webex (communication via channels, calls and SharePoint)

- Salesforce console (used to build the different portals for testing)

- Amazon Web Services (AWS) S3 bucket (used to store and organise files)

- Postman (used for API testing)

APPROACH

The following steps were covered as part of the testing approach:

- The QA team received a walkthrough of the whole application, along with the related documentation, from the respective Business Analyst. Requirements were gathered during this process.

- Testing was divided into several types, i.e., System testing, System Integration testing and Sanity testing. A test plan was drafted for each sprint to explain the test strategy and the testing activities to be covered during that sprint's testing cycle.

- Test cases were designed for all user stories planned for the sprint and stored in a common SharePoint location (the Microsoft Teams channel). The respective Business Analyst later reviewed these test cases to capture any missed scenarios.

- Test user IDs were created or acquired for the different roles, and test data was prepared for each test case as per the requirements.

- All test cases from the System Integration Testing suite for the relevant scenarios were executed in two testing cycles, i.e., System Integration Testing and User Acceptance Testing. All testing effort was tracked in Jira/Zephyr.

- All defects were fixed and retested, and the results were tracked in Jira.

- A weekly defect triage was scheduled to discuss the defects and revisit the priority of each reported issue.

- Regression and exploratory testing were performed at the end of the testing cycle to make sure the defect fixes had not introduced any regression defects.

- A daily System Integration Test Summary Report was generated from Jira/Zephyr covering all executed test cases. The report gives explicit counts of test cases passed, failed and blocked, and details all open defects.

RESULTS

- 200 defects were logged as part of the testing cycle

- 1580 test cases were designed as part of this project

- 25 test scenarios were designed for the Sanity Shakedown Suite as part of this project

- 101 automation test scripts were designed in Tosca Commander as part of this project

- Approximately 83 test cases were executed across multiple test execution cycles, including regression testing

- Many edge-case scenarios that were not previously covered were exposed through exploratory testing

BEST PRACTICES

The following best practices were implemented:

- Creation of a Master Test Suite for the whole application covering all end-to-end scenarios.

- The test cases were reviewed by the Business Analyst for all the user stories before the execution phase to cover any missed scenarios.

- Defect triage was planned on a weekly basis.

- A daily status report was sent to the whole team describing the defects logged each day and the count of test cases executed/created.

- A weekly status report was sent to the client covering completed activities and those planned for the upcoming week.

- Traceability was maintained through Jira/Zephyr so that each defect could be traced to a test case and, through it, to the corresponding user story.

- A daily collaboration meeting was held between the testing team and the development team to share updates and resolve any confusion about the defects.
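The defect-to-test-case-to-user-story traceability described above can be sketched as two chained lookups. This is a minimal sketch, assuming the links have been exported from Jira/Zephyr into plain mappings; the dictionaries, keys such as `DEF-101`, `TC-12` and `US-3`, and the functions `trace_defect` and `defects_by_story` are all hypothetical and do not use any Jira or Zephyr API.

```python
from collections import defaultdict
from typing import Optional

# Hypothetical exported links; in the project these were held in Jira/Zephyr.
DEFECT_TO_TEST_CASE = {"DEF-101": "TC-12", "DEF-102": "TC-40", "DEF-103": "TC-12"}
TEST_CASE_TO_STORY = {"TC-12": "US-3", "TC-40": "US-7"}


def trace_defect(
    defect_key: str,
    defect_to_tc: dict[str, str],
    tc_to_story: dict[str, str],
) -> tuple[Optional[str], Optional[str]]:
    """Follow defect -> test case -> user story; None marks a missing link."""
    test_case = defect_to_tc.get(defect_key)
    story = tc_to_story.get(test_case) if test_case is not None else None
    return test_case, story


def defects_by_story(
    defect_to_tc: dict[str, str],
    tc_to_story: dict[str, str],
) -> dict[str, list[str]]:
    """Group defect keys under the user story they trace back to."""
    grouped = defaultdict(list)
    for defect, test_case in defect_to_tc.items():
        # "UNLINKED" collects defects whose test case has no story link.
        grouped[tc_to_story.get(test_case, "UNLINKED")].append(defect)
    return dict(grouped)


if __name__ == "__main__":
    print(trace_defect("DEF-102", DEFECT_TO_TEST_CASE, TEST_CASE_TO_STORY))
    # ('TC-40', 'US-7')
    print(defects_by_story(DEFECT_TO_TEST_CASE, TEST_CASE_TO_STORY))
    # {'US-3': ['DEF-101', 'DEF-103'], 'US-7': ['DEF-102']}
```

The `"UNLINKED"` bucket is a design choice that makes gaps in traceability visible rather than silently dropping those defects.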